QCon London 2020 Notes

Last week I attended the QCon London 2020 Conference first time. I think it’s one of the best-rated software engineering conferences in Europe. I had two main expectations from the conference; the first one is inspiring ideas, the other one is experience sharings about some architectural design.

A view scene from the Queen Elizabeth II Centre

The conference was organized very well, it reflected the experience of the team behind it.

Each day, the schedule was divided into separate tracks, like Next Generation Microservices: Building Distributed Systems the Right Way, etc. So I could easily track the related sessions. Every track had a host who is responsible for the whole track sessions, and every morning these hosts introduced their tracks to the whole audience.

The conference mobile application made my life easy during the conference, the application has features like building your schedule, accessing the whole schedule and information about sessions, map of the building, getting notifications, etc.

In this post, I would like to write about tracks/sessions that I attended and had some takeaways.

Microservices

There were not many new things about what people said about microservices, but some of them were important because of the person who said them like Sam Newman. Because he is one of the pioneers in this field and has lots of consultancy experience.

The best one for me was Monzo’s presentation because they presented some of their practices to manage the 1600 microservices. Monzo had similar presentations that I watched online. https://www.theregister.co.uk/2020/03/09/monzo_microservices/

There was a session about Segment’s case, To Microservices and Back Again, basically similar content with this blog post, https://segment.com/blog/goodbye-microservices/.

My takeaways for microservices were;

Golden rules for every solution in software: ‘it depends’, ‘no silver bullet’. So it’s ridiculous to say generic things like ‘monolith is enemy’, ‘monolith is legacy’, ‘microservices is future’, ‘microservices is hype’, etc.

Newman said that microservices should not be the default choice, and it’s not a good choice for most of the startups. He talked about the benefits of a ‘modular monolith’ approach, such as degree of independence to work on modules. He also emphasized that the ‘modular monolith’ approach is highly underrated and If your runtime environment enables you to make hot deployment of modules(like Erlang does), this approach can have many benefits.

There is a loop of problems and solutions; What are your problems?, How will microservices solve these problems?, What will be your new problems with microservices? How will you solve them?… So, use the right tools for the problems and be aware of your new problems with these tools.

One of the most difficult problems of microservices is determining the service boundaries. Domain-Driven Design approach is one of the best approaches to solve this problem.

Build everything incrementally at every level; from writing a little code piece or releasing a little bug fix, to convert your whole architecture. When building microservices, an incremental evolutionary architecture approach can help you much to find the right architecture.

Set the balance between control and autonomy. The extreme points are every team decides own technology and practices and only a central authority decides everything. Monzo had good examples for this one.

Build practices and tools to achieve above ones; TDD, design principles(like DDD), test principles, team rotations, pair programming, incremental release(separate deployment and release), experiment culture, shared infrastructure, enable team to focus business rather than infrastructure, good onboarding documentation, and process, CI/CD pipeline, development/testing environments, etc.

And, evangelize your practices and tools across the company.

I attended to below sessions with related to Microservices Architecture;

- Monolith Decomposition Patterns

- Beyond the Distributed Monolith: Rearchitecting the Big Data Platform

- To Microservices and Back Again

- Modern Banking in 1500 Microservices

Streaming Data Architectures

I attended to the Streaming a Million likes/second: Real-time Interactions on Live Video for the Streaming Data Architectures track.

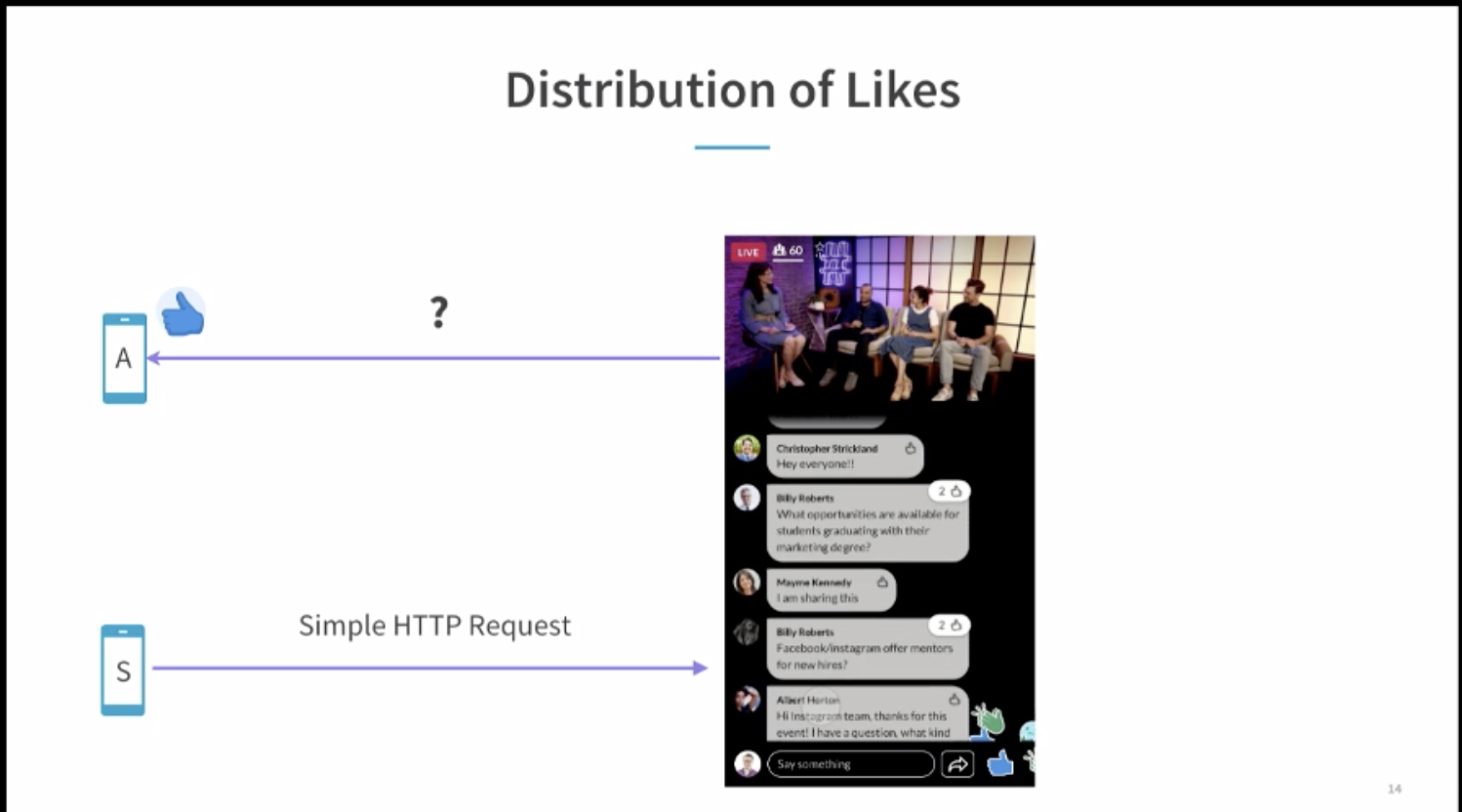

Distribution of Likes

This presentation was about how LinkedIn manages to share events(like comments, concurrent viewer counts) between clients on live videos. Akhilesh Gupta talked about 7 different challenges and scalability problems when they were building their streaming architecture.

These challenges were “the delivery pipeline”, “connection management”, “multiple live videos”, “10K concurrent viewers”, “100 likes/second”, “100 likes/s, 10K Viewers, Distribution of 1M Likes/s”, “add another datacenter”.

This presentation was one of the best one for me, Akhilesh Gupta expressed very clearly how their architecture evolved and they could solve some challenging problems with simple approaches.

I try to give you some details about this presentation. They use persistent connections between consumer and realtime delivery system, these are regular HTTP connections(Http text/event-stream, Server-Sent Events), not WebSockets.

They use Play Framework with Akka Actors. Every client connection has a corresponding Akka actor instance and a supervisor actor decides to which event will be sent to which actors.

Their architecture consists of three main components(layers), these are Frontend nodes, dispatcher nodes and a key-value store(Couchbase, Redis, etc.) to keep the relation between live video and frontend nodes.

Dispatcher nodes decide which event will be sent to which frontend nodes because frontend nodes send subscribe events to dispatcher nodes for the specific live videos when a client connects that frontend nodes for that specific live video.

Clients connect to frontend nodes and these nodes decide which events will be sent to which clients when a dispatcher node publishes an event to these frontend nodes.

For different data centers, they use cross data center publishing of events. When a dispatcher gets an event, it firstly checks it’s data center local key-value storage and then publishes this event to dispatchers in other data centers.

For the scalability part, they can keep about 100K connections per frontend nodes, so they could stream to 18M viewers of the Royal Wedding(Second largest streaming event) with 180 machines. A single dispatcher node can publish 5K events/second. Also, their delivery system’s latency is 75ms at p90.

There is paper of them about how they manage the presence(online/offline) of the clients.

APIs

I attended a few sessions from the track The Future of the API: REST, gRPC, GraphQL, and More. But only Moving Beyond Request-Reply: How Smart APIs Are Different was meaningful for me.

There were some examples of bad user experience because of the system failure and some pattern suggestions to eliminate these bad user experiences. So, “Failure will happen. Accept it! But keep it local! Be resilient.”

One of the examples was issuing a boarding pass for a flight. If you get an error while doing this operation you should try it later, but this’s a bad user experience because as a user you should try the same operation again and again without knowing that system is fixed or not. Maybe you’ll set up a manual reminder to yourself to remind you to try again. Instead of this, the system should behave differently. If the system can’t issue your boarding pass then it should give you feedback something like, “We’re sorry…We are having some technical difficulties and can’t present to you your boarding pass right away. But we do actively retry ourselves, so lean back, relax and we will send it on time”. Related patterns with this are “long-running process/services”, “asynchronous retry” and “stateful retry”.

Some other patterns are “at least once delivery”, “idempotency”, examples from payment transactions for these patterns.

With distributed systems’ complexity, these patterns’ importance is increased. Also, we can use event-driven architecture, events, and commands to decouple our services’ integration. And we should balance choreography and orchestration while executing workflows with microservices.

Architectures

I attended to Tesla Virtual Power Plant for the track Architectures You’ve Always Wondered About.

The presentation was about Tesla’s distributed, reactive, message-driven architecture to manage the network of distributed energy-resources (often solar, wind, and batteries)(VPP, Virtual Power Plant). These resources are used both for the supplement and demand for energy. As far as I understand there two aspects of this management, one of them is the management of assets(devices), another one is gathering telemetry data from those assets, and taking some actions(like alerting) according to these telemetry data.

I noted mostly their technology choices for the problems;

- Websockets to connect with devices,

- Kafka to ingest telemetry data from IoT devices,

- Influxdb to store time-series data,

- PostgreSQL to store assets’ information and control data of assets,

- Akka for microservices, keep the state for each device with Akka,

- Akka streams, Alpakka

- Scala(because of Akka) as a programming language,

- Kubernetes,

- gRPC for service to service communication,

- Protobuf, for stream messages.

Keynotes

The day-1 keynote was The Internet of Things Might Have Less Internet Than We Thought? The talk was about privacy, security, ownership problems of IoT devices that are connected to the Internet. There were some cases for CloudPets, Internet-connected cars, robot vacuum, etc. The solution can be local running devices without cloud but with ML with the technologies like Tensorflow Lite, SparkFun edge, Rasperypie, etc.

The day-2 keynote was Technical Leadership Through the Underground Railroad. This one was about inspiring from the history when we find solutions to our technical leadership problems. He explained how the lessons from the Underground Railroad of the US that was used to escape from slavery can help to solve our current technical leadership problems.

I’m not sure about how we relate the contexts of his examples and our problems but I liked the emphasis of history. I remembered again, many problems that we are facing are not unique to us or our era. Most of them were faced before us and people found some solutions to them. If we know about these solutions we wouldn’t reinvent these solutions.

The day-3 keynote was Interoperability of Open-Source Tools: The Emergence of Interfaces . This one was about the evolution of interfaces within the Kubernetes, like CRI, CNI, CSI, ClusterAPI, SMI.

As a Kubernetes storage SIG and CSI contributor, I’m quite familiar with that content, so it was not much interesting for me, but I liked the presentation style of Katie Gamanji. She was very calm, she reflected her knowledge and self-confidence.

And closing keynote was The Apollo Mindset: Making Mission Impossible, Possible. This one was about the story of the Apollo mission but from different perspectives, like passion, leadership, trust, self-belief, etc.

I remembered the success and failure relationship with this presentation. Most of the time success is not like a thing that you try something that you want to achieve and do it at your first try. It’s more likely, you tried and failed, you tried and failed, you tried and failed, …, you tried and succeed! If you believe in something, if you have a passion to succeed it then you should be ready for many failures. Every failure has many lessons to be learned to move you one step further.

Conclusion

There were 149 total sessions and I attended 21 of them. My expectations from the contents of the sessions that I attended were quite higher than what I found. I tried different strategies when selecting sessions, concepts that I have some knowledge, concepts that I just hear something and wonder a lot, concepts that I don’t know anything, etc. I mentioned about my expectations from the conference, inspiring ideas and practical technical experience sharings. Unfortunately, I almost found nothing for the inspiring, exciting ideas part, and just a few for the latter one.

Apart from the content of sessions, another benefit of conferences is of course networking and talking with other peers about their experiences. Unfortunately, my motivation for networking and talking with other peers was not high enough because of the Covid-19. I generally tried to avoid involving crowds and met just a few persons. I had a long conversation only with the guys from Optimizely in the early morning, before 9 am :).

Attending this kind of conference is not easy for me, because it’s quite expensive. (Maybe it’s too expensive when we compare the monthly minimum wages between the UK and Turkey). I spent almost my 2-year learning budget to attend this conference, so my expectation might be a little higher also for this reason. Anyway, it was a different experience for me for one week.